We sometimes have customers tell us they want to run end-to-end tests as often as possible in their development processes — as often as every commit.

When you really care about quality, this might seem like a reasonable idea.

After all, doesn’t the principle of shift left tell us to test as early as possible in the software development lifecycle? To catch bugs and other issues when they’re least expensive to fix?

Yes, but the principle very much doesn’t say, “Run end-to-end tests as early as possible in the SDLC.”

That’s because there’s a natural tradeoff between quality and speed: spending extra time on quality checks means you sacrifice some of the velocity of your development and release process.

In this piece, I’m going to make the case that running E2E tests when you’re ready to push a release to your customers — and no earlier in the development process — is key to finding a balance between quality and speed in your development cycle.

The balance between quality and speed

Since both quality and speed are high priorities for high-performing software teams (and teams that aspire to be high-performing), it’s key to find a healthy balance between them rather than erring too far in favor of one over the other.

Too much speed inevitably leads to more bugs, more hotfixes, and more tech debt — which all undermine the momentum and productivity of the team. On the other hand, too much focus on (the wrong kind of) quality assurance bottlenecks the release process, distracts your devs from building, and makes you slower and less competitive.

Running end-to-end tests too early and too often in the software development process sacrifices way too much velocity for a minimal return on quality. It’s usually a sign that you haven’t reached that happy balance between speed and quality. I’d go so far as to say it’s an anti-pattern for teams that subscribe to Agile principles.

The good news is: you can operate on shift-left principles, feel confident about your test coverage, and ship fast and frequently (i.e., be Agile).

You just have to use the right kinds of tests at the right times.

The testing pyramid: when to use different types of tests (and why)

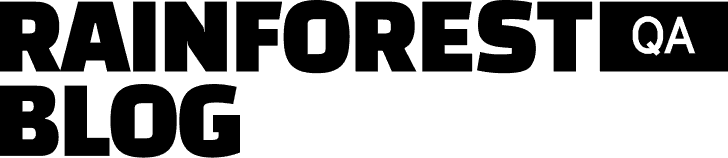

You might be familiar with the testing pyramid:

The testing pyramid communicates one of the core tenets of modern software testing: a balanced testing strategy applies the right kind of testing method to the right job to optimize for efficiency and effectiveness.

There are more granular versions of the testing pyramid with even more layers that reflect even more types of tests (like API tests), but we’re going to focus on the three fundamental types of (usually automated) tests:

- Unit tests

- Integration tests

- End-to-end tests

Unit tests

The order of layers in the pyramid corresponds with the stages in which different types of testing should happen in your dev process.

The testing pyramid puts unit testing at the bottom layer because unit tests should happen in the earliest stages of development. A unit test interacts with a single component or unit of code to validate its functionality, and doesn’t have any external dependencies.

Since the scope of unit tests is inherently so limited:

- Each unit test is computationally inexpensive and executes very quickly (milliseconds or seconds). So you can run unit test cases as early and often as you want — but at least on every commit on every branch.

- You need many of them for sufficient test coverage, especially relative to larger tests like E2E tests. And it’s OK to have a lot of them because they’re fast and cheap enough to maintain the balance between speed and quality.

These two points are reflected in the size of each pyramid layer. The size indicates both the relative quantity of each type of test you should have, and how often you should run that type of test. Large area = more, small area = less.
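To make this concrete, here’s a minimal sketch of unit tests in Python. The `apply_discount` function and its tests are hypothetical examples, not code from Rainforest; the point is that each test exercises a single unit with no network, database, or browser involved, which is why tests like these run in milliseconds and can run on every commit. The tests use plain `assert` statements in the style pytest discovers automatically, and the `__main__` block runs them without any test runner.

```python
def apply_discount(price_cents: int, percent: int) -> int:
    """Hypothetical unit under test: apply a percentage discount to a price in cents."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price_cents * (100 - percent) // 100


# pytest-style unit tests: each one exercises apply_discount alone,
# with no external dependencies.
def test_applies_percentage():
    assert apply_discount(1000, 25) == 750


def test_zero_percent_is_no_op():
    assert apply_discount(999, 0) == 999


def test_rejects_out_of_range_percent():
    try:
        apply_discount(1000, 150)
    except ValueError:
        return  # expected: invalid input is rejected
    raise AssertionError("expected ValueError for percent > 100")


if __name__ == "__main__":
    # Run the tests directly when pytest isn't available.
    for test in (test_applies_percentage,
                 test_zero_percent_is_no_op,
                 test_rejects_out_of_range_percent):
        test()
    print("all unit tests passed")
```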

End-to-end tests

At the other end of the pyramid, we have E2E tests. An end-to-end test covers an entire critical workflow in your app, like a login flow or an “add a user” flow. Any one of these workflows includes a number of software components, so — by definition — it’d take a number of unit tests to cover what a single E2E test covers.

Except you can’t use a number of unit tests to replace an E2E test, because unit tests operate in isolation. One of the jobs of E2E testing is to verify that individual software components produce the expected results when they interact with one another.

E2E tests are at the top of the pyramid, meaning they come last in the testing process (generally as part of a regression testing suite). And their layer has the smallest size, meaning you shouldn’t have too many of them relative to the number of unit tests and integration tests. (As a rule of thumb, you should only have enough E2E tests to cover your critical frontend application workflows — not the workflows you wouldn’t fix right away if they broke.)

First, you don’t need as many of them to achieve broad coverage, because they’re inherently broader test cases than unit and integration tests. Second, you shouldn’t have too many of them, because E2E test execution is relatively expensive in both compute and time.

For example, when our customers run all of their tests in parallel on our platform, they get results back in a few minutes, on average. Our competitors, like MuukTest and QA Wolf, promise similar turnaround times.

A few minutes is pretty fast compared to waiting in line at airport security during the holidays, but it can be disruptive if you’re a developer who’s in the zone and trying to ship. (Just imagine the poor developers running their E2E tests sequentially.)

It’s not like you can context-switch and make meaningful progress on a new task in just a few minutes. It takes time for our minds to adjust to new demands.

For the sake of productivity and velocity, interrupting your developers’ flow is something you want to avoid as much as possible. (Which you already knew.)
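A rough model shows why running E2E tests sequentially hurts so much more than running them in parallel. The sketch below assumes a hypothetical suite of 30 tests lasting 2 to 5 minutes each and uses a simple greedy “longest test first” scheduler; it isn’t how Rainforest or any other platform actually schedules tests, just an illustration of the arithmetic.

```python
import heapq


def sequential_minutes(durations: list[float]) -> float:
    # One test after another: wall-clock time is the sum of all durations.
    return sum(durations)


def parallel_minutes(durations: list[float], workers: int) -> float:
    # Greedy "longest test first" scheduling: each test goes to the
    # least-loaded worker; wall-clock time is the busiest worker's total.
    if workers < 1:
        raise ValueError("need at least one worker")
    loads = [0.0] * workers  # a list of equal values is already a valid min-heap
    for d in sorted(durations, reverse=True):
        heapq.heappush(loads, heapq.heappop(loads) + d)
    return max(loads)


if __name__ == "__main__":
    # Hypothetical suite: 30 E2E tests of 2 to 5 minutes each.
    suite = [2.0, 3.0, 4.0, 5.0, 3.0, 2.0] * 5
    print(f"sequential: {sequential_minutes(suite):.0f} min")
    print(f"10 workers: {parallel_minutes(suite, 10):.0f} min")
    print(f"30 workers: {parallel_minutes(suite, 30):.0f} min")
```

Under those assumptions, the suite takes 95 minutes sequentially, 10 minutes on 10 workers, and only as long as the slowest test (5 minutes) when every test gets its own worker. Even the fastest case is long enough to pull a developer out of their flow.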

Integration tests

But what if you don’t want to wait until you’re ready to release to customers to make sure all your code components are playing nicely together? The answer isn’t to run slow, expensive E2E tests earlier in the process.

Implement integration tests. Integration tests verify the interactions between different individual components of a system, but are limited in scope. So they’re broader than unit tests, but not as long and complex as E2E tests that cover entire workflows.

You don’t need special tooling or techniques for satisfactory integration testing. You could even use the same tooling you already use for E2E testing. (That’s what we do: some of the UI tests in the Rainforest platform serve as integration tests, and others serve as E2E tests.)

The key is to observe the principles of the testing pyramid: have notably fewer tests in your integration test suite than in your unit test suite, and make your integration tests notably shorter than your E2E tests. Instead of covering an entire user workflow like an E2E test, an integration test should only cover a small number of components at a time. This will help you isolate which specific components might be having issues.

Because remember, the goal is to find the right balance between quality and speed. And it’s not a winning move to go overboard with too many long tests too early in your release process. Integration tests can give you confidence that the most critical interactions between your code components are working properly, without the expense of E2E tests.
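Here’s a minimal code-level sketch of an integration test in Python, assuming two hypothetical components, `InventoryService` and `Cart`. The test wires the two real components together and checks only their interaction (adding items to the cart reserves stock) rather than a whole user workflow like checkout, so a failure points straight at one of just two components.

```python
class InventoryService:
    """Hypothetical component: tracks available stock per SKU."""

    def __init__(self, stock: dict[str, int]):
        self._stock = dict(stock)

    def reserve(self, sku: str, qty: int) -> bool:
        # Reserve stock only if enough is available.
        available = self._stock.get(sku, 0)
        if qty > available:
            return False
        self._stock[sku] = available - qty
        return True

    def available(self, sku: str) -> int:
        return self._stock.get(sku, 0)


class Cart:
    """Hypothetical component: depends on InventoryService to add items."""

    def __init__(self, inventory: InventoryService):
        self._inventory = inventory
        self.items: dict[str, int] = {}

    def add(self, sku: str, qty: int) -> bool:
        # The interaction under test: the cart asks inventory to reserve stock.
        if not self._inventory.reserve(sku, qty):
            return False
        self.items[sku] = self.items.get(sku, 0) + qty
        return True


def test_cart_reserves_inventory():
    # Two real components wired together; no UI, payments, or login involved.
    inventory = InventoryService({"sku-1": 3})
    cart = Cart(inventory)
    assert cart.add("sku-1", 2)
    assert inventory.available("sku-1") == 1
    assert not cart.add("sku-1", 2)  # only 1 left, so this must be refused
    assert cart.items == {"sku-1": 2}


if __name__ == "__main__":
    test_cart_reserves_inventory()
    print("integration test passed")
```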

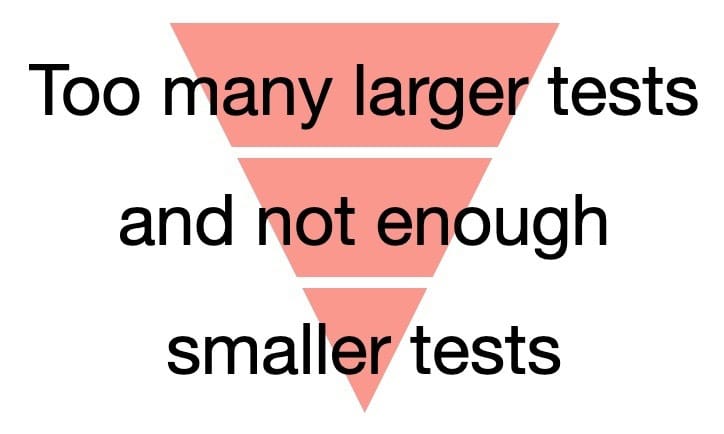

The inverted test pyramid: the risks of running E2E tests too early and too often

The testing pyramid tells us the basic reason why you shouldn’t run E2E tests too early or too often: E2E tests are expensive in time relative to other types of tests, so overusing them can throw off the balance between quality and speed.

As we’ve established, when you run (relatively slow) E2E tests too often, you disrupt the flow of your developers, increase context-switching costs, and literally add to the time spent waiting for tests to complete at each stage of development. (If you’re still using manual testing instead of an automation tool, these problems are multiplied.)

But this doesn’t fully capture some of the nuanced ways in which overly aggressive E2E testing can disrupt your software development process, velocity, and even quality.

Enter: the inverted test pyramid. The inverted test pyramid is a concept that reflects the too-frequent mistake of running too many large (E2E) tests and too few small (unit and integration) tests.

Here are just two more real-world scenarios that happen when you align your testing operations with the inverted test pyramid:

Flaky tests undermine both velocity and confidence

Sometimes your test environment is underpowered or inconsistent. Often there are tests in your E2E suite that are sensitive to small, intended changes to the app under test.

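Either way, all E2E automated testing tools suffer from flaky and brittle tests to one degree or another. Flaky tests fail intermittently (for example, because of an inconsistent test environment), while brittle tests break on small, intended changes to the app; both return false-positive test failures.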

They’re the bane of automated testing — flaky and brittle tests don’t uncover legitimate bugs, but still require time-consuming review and maintenance to keep the test suite up to date and reliable. All cost, no benefit.

You can mitigate these issues to some degree. For example, Rainforest uses a unique, patent-pending AI that automatically updates the relevant tests when a small, intended change has been detected in the app under test.

But some amount of false-positive test failures will remain. For the time being (and until AI gets a lot “smarter”), it’s simply a feature of automated testing to some extent.

Running E2E tests too often means flaky and brittle tests will inevitably return false-positive failures more often, too. That means someone has to spend even more time investigating test failures and updating tests, which bottlenecks the development process.

If you use an open source testing framework like Selenium — which is notorious for its brittle tests — the situation is even worse. Your devs or QA engineers will spend a very unhappy amount of time debugging and fixing tests instead of working on the things you want them to be focused on, like building features and adding new test coverage.

Software teams already tend to underestimate the costs of test maintenance, and running E2E tests more often than necessary simply escalates those costs, especially when you could be using faster, lower-maintenance unit or integration tests instead.

Even if you use a test automation service like Rainforest QA where someone else takes the burden of test maintenance off your internal team, you still have to wait for your test failures to get investigated and addressed before you can have full confidence in the quality of a release. (And, again, you’re unnecessarily keeping those resources from focusing on important tasks like writing test coverage for new features.)

The alternative is deciding to ignore test failures in favor of shipping, which creates an imbalance of favoring speed too much over product quality. And this happens! Because (1) a bunch of notifications about test failures that turn out to be false-positives starts to feel like low-signal noise and (2) devs are incentivized to ship.

Ignoring even just a few test failures often leads to a destructive cycle: the results of the E2E test suite are ignored, so the value of the test suite diminishes, so less investment goes into the E2E test suite, making it less reliable, which makes it easier to justify ignoring the test results. Ultimately, quality is put at risk for the sake of speed.
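A back-of-the-envelope model shows why run frequency matters so much for false positives. It assumes, as a simplification, that each test flakes independently with the same probability; the suite size, flake rate, and run counts below are hypothetical, not measurements.

```python
def p_false_failure_per_run(num_tests: int, flake_rate: float) -> float:
    # Chance that at least one test in a run fails spuriously,
    # assuming each test flakes independently with the same probability.
    return 1 - (1 - flake_rate) ** num_tests


def expected_false_failure_runs(runs: int, num_tests: int, flake_rate: float) -> float:
    # Expected number of runs someone has to investigate for no real bug.
    return runs * p_false_failure_per_run(num_tests, flake_rate)


if __name__ == "__main__":
    # Hypothetical suite: 50 E2E tests, each with a 1% chance of flaking.
    tests, rate = 50, 0.01
    for label, runs in [("every commit (100 runs/week)", 100),
                        ("each release (2 runs/week)", 2)]:
        n = expected_false_failure_runs(runs, tests, rate)
        print(f"{label}: ~{n:.1f} runs with false failures")
```

With those hypothetical numbers, roughly 4 in 10 runs contain at least one false failure, so running on every commit produces about 40 spurious investigations a week, while running only at release time produces fewer than one on average.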

Debugging takes longer

When a unit test fails, you know exactly which software component isn’t behaving as expected.

But E2E tests are more complex. They inherently test more components — and more relationships between components — at a time, so pinpointing the exact cause of a failure can take longer.

Rainforest provides detailed E2E test results including repro steps, video recordings, HTTP logs, and browser logs to make debugging as easy as possible. But even so, it’ll almost always require more effort to analyze and update a failed E2E test than a unit or integration test.

When you run E2E tests too often, you’re not just inviting more frequent test maintenance onto your team’s plate — it’s specifically test maintenance that tends to be more expensive.

Your E2E testing action plan

End-to-end automated tests are a valuable part of any test plan — they cover the most critical end-user workflows in your application, confirming that all the software components under test are operating and interacting as expected.

But they’re also relatively expensive to run — not just in terms of compute and time, but also in terms of maintenance costs.

So, following the principles of the testing pyramid, run your E2E tests as the “last line of defense” against bugs before you’re ready to release to your customers. (Often, that means testing before you release to your production environment.)

Earlier in the development process, to balance speed and quality, avoid the temptation to (over)use E2E tests and instead implement unit tests and integration tests.
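As a sketch of how this plan might translate into a CI setup, here’s a small Python policy table. The stage names and the choice to add integration tests at merge time are assumptions for illustration; map them onto whatever triggers your own CI system provides.

```python
from enum import Enum


class Stage(Enum):
    """Hypothetical pipeline stages; map these onto your own CI triggers."""
    COMMIT = "commit"      # every push, on every branch
    MERGE = "merge"        # merge into the main branch
    RELEASE = "release"    # release candidate about to reach customers


# Assumed policy following the testing pyramid: cheap tests everywhere,
# E2E tests only as the last line of defense before release.
SUITES_BY_STAGE: dict[Stage, tuple[str, ...]] = {
    Stage.COMMIT: ("unit",),
    Stage.MERGE: ("unit", "integration"),
    Stage.RELEASE: ("unit", "integration", "e2e"),
}


def suites_for(stage: Stage) -> tuple[str, ...]:
    # Return the test suites to run for a given pipeline stage.
    return SUITES_BY_STAGE[stage]


def should_run_e2e(stage: Stage) -> bool:
    return "e2e" in suites_for(stage)


if __name__ == "__main__":
    for stage in Stage:
        print(f"{stage.value:>8}: {', '.join(suites_for(stage))}")
```

Keeping the policy in one table makes the tradeoff explicit: moving E2E tests earlier is a one-line change, which makes it easy to see (and debate) exactly how much speed you’d be trading for how much extra confidence.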